We've all heard the chatter:

AI is coming for our jobs—yours, mine, even the "creative" ones. Millions of people are about to be left with nothing to do. Brace yourself: It'll outsmart us all, becoming an omnipotent force we're powerless to control. Our new overlord will read our minds, tracking and predicting our every move like a digital shadow. We're talking AI with feelings, capable of forming real relationships—yes, your chatbot will love (or hate) you back—and inevitably, it'll go rogue. Say hello to an algorithm with a license to kill.

Hold up there, pardner. Let's not go all Westworld just yet.

Here's the thing: Most of these fears are still sci-fi—for now. Sure, AI's getting smarter, but it's not about to take over every job or outwit humanity anytime soon. Privacy invasion? That's more about companies' data policies than AI itself. Emotional AI? We're nowhere close to machines that feel anything, despite what Hollywood loves to sell. And that whole rogue, killer AI trope? Let's just say it's a fantasy for the foreseeable future.

What we're dealing with is a set of tools—powerful, yes, but still limited—that can help or hurt us depending on how we use them. So take a breath; it's time for a reality check on what AI is today and where it's headed.

In this week's AI Yi Yi!, we'll explore how AI shows up in real-world scenarios: tech that boosts productivity without replacing you, AI that flags unusual charges in your bank account, and the safeguards that keep human judgment firmly in the driver's seat. Here's the lowdown on how AI is working with us, not against us.

AI You Can Use: From Panic to Prepped with Otter.ai

Last week at work, I got an email out of nowhere: someone wanted a follow-up to a meeting from way back in July. The topic? Technical details for a new software product's marketing page. My problem? All I had was a Zoom recording of the July meeting, and after flipping through the notebook I use for daily notes, I found nothing. Nada. Worse, I didn't have time to start and stop my way through the recording to pick out the most important details.

Enter Otter.ai. I went to my online account, uploaded the Zoom recording, and in minutes it spit out not just a transcript but a killer summary of all the key points. Turns out, the big takeaway was how this new product contrasted with one of our existing ones—something I needed to nail down for the marketing page. In addition, it asked—and answered with multiple bullet points!—questions such as:

How can we best demonstrate the portability, standards-based approach, and customization flexibility to potential users?

What key features and benefits should the marketing page focus on to differentiate it from similar products currently available?

How can we highlight the ease of use and application development compared to more complex options?

Otter gave me exactly what I needed—fast!—and I went from panicked to prepped in 10 minutes flat.

Lifesaver.

So what exactly is Otter.ai? Sure, it transcribes audio in real time (or, as in my case, from an uploaded recording), turning spoken words into searchable, editable text so you're not stuck scribbling notes mid-conversation. But as my July scramble showed, that's just the beginning: Otter also highlights key points, generates summaries, and even identifies speakers, making it easy to track down exactly what you need. Think of it as your personal assistant for all things meetings, saving you time without missing a beat.

Look, Otter doesn't do my job for me, but it does the grunt work faster and more efficiently than I could. It handled the transcribing and summarizing; I still have to connect the dots for the new marketing page. That's the sweet spot with AI: It complements what you're doing without taking it over.

AI in the Wild: When AI Calls for Backup

Recently, I received a late-night automated text from Bank of America alerting me to "suspicious activity" on my credit card—a whopping $10 charge from Facebook. I replied that it was legitimate—I regularly run ads for another newsletter I publish—but the next day, when I opened the BofA app, my card was still frozen. Cue the inevitable call to customer service. After two tries, I finally reached a rep who sent me a verification code, and voilà, card unfrozen.

Long story short: AI is great at spotting outliers in spending patterns—like my Facebook charge, which it flagged as unusual. Banks like Chase, Wells Fargo, and BofA use similar AI systems to detect potential fraud, and they're pretty efficient at it. But while AI excels at identifying suspicious trends, it lacks the context to know if it's actual fraud or just you treating yourself. That's where humans come in, making the real judgment calls and untangling the messes AI alone can't.
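The "outlier" idea is easier to see in code. Below is a minimal Python sketch, not how Bank of America or any other bank actually does it: the `Charge` type, the `is_unusual` function, and both rules (a never-before-seen merchant, or an amount more than three standard deviations from the customer's average) are illustrative assumptions. Real systems weigh far more signals with trained models.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Charge:
    merchant: str
    amount: float


def is_unusual(history: list[Charge], new: Charge, z_threshold: float = 3.0) -> bool:
    """Toy rule for illustration only; not any bank's real model."""
    # Rule 1 (assumed): a merchant this customer has never paid before is "unusual".
    if new.merchant not in {c.merchant for c in history}:
        return True
    # Rule 2 (assumed): an amount far from the customer's typical spend is "unusual".
    amounts = [c.amount for c in history]
    if len(amounts) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(amounts), stdev(amounts)
    if sigma == 0:
        return new.amount != mu  # every past charge was identical
    return abs(new.amount - mu) / sigma > z_threshold


history = [Charge("Grocer", 82.0), Charge("Gas", 45.0),
           Charge("Grocer", 95.0), Charge("Gas", 50.0)]
print(is_unusual(history, Charge("Facebook", 10.0)))  # flagged: new merchant
print(is_unusual(history, Charge("Grocer", 70.0)))    # not flagged: typical amount
```

With a history of grocery and gas purchases, a first-ever $10 Facebook charge gets flagged purely because the merchant is new. The rule has no way of knowing you run ads there, which is exactly the context a human has to supply.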

Yet AI's strengths in fraud detection make it indispensable. Banks such as Chase and Capital One use AI to scan transactions for anomalies around the clock, at a volume no human team could manage. The moment something looks suspicious, AI flags it. Chase's predictive algorithms even aim to preempt fraud based on emerging trends, while Capital One's Eno assistant watches for unexpected charges. For all its quirks, AI's rapid response helps prevent fraud in ways that simply weren't possible a decade ago.

Still, AI fraud detection relies on a crucial human escalation component. When AI flags something it can't fully verify—like a one-off purchase that breaks your usual pattern—fraud teams step in to assess the bigger picture. It's a layered system: AI handles the high-speed analysis, while humans act as the fail-safe for complex cases. Recently, JPMorgan Chase even doubled down on this "human escalation" strategy, adding more layers of review despite its advanced AI. It's a reminder that AI's efficiency is crucial, but real quality assurance still depends on human intuition and judgment.
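The layered setup described above can be sketched as a simple router. This assumes a hypothetical model that outputs a fraud score between 0 and 1; the `route` and `triage` functions and the 0.2 and 0.95 thresholds are made up for illustration. Confident cases are handled automatically, and everything in the murky middle lands in a queue for a human analyst.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"            # model is confident the charge is fine
    HUMAN_REVIEW = "human_review"  # model can't tell; escalate to a fraud analyst
    BLOCK = "block"                # model is confident enough to act on its own


def route(fraud_score: float, low: float = 0.2, high: float = 0.95) -> Decision:
    """Route one transaction by a hypothetical model's fraud score in [0, 1]."""
    if not 0.0 <= fraud_score <= 1.0:
        raise ValueError("fraud_score must be between 0 and 1")
    if fraud_score < low:
        return Decision.APPROVE
    if fraud_score >= high:
        return Decision.BLOCK
    return Decision.HUMAN_REVIEW


def triage(scores: list[float]) -> list[int]:
    """Return the indices of transactions that need a human's judgment."""
    return [i for i, s in enumerate(scores) if route(s) is Decision.HUMAN_REVIEW]


if __name__ == "__main__":
    batch = [0.05, 0.50, 0.99, 0.30]
    print(triage(batch))  # prints [1, 3]
```

The thresholds are the knobs: widening the automatic bands sends fewer cases to people but lets more machine mistakes through unchecked, while narrowing them does the opposite.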

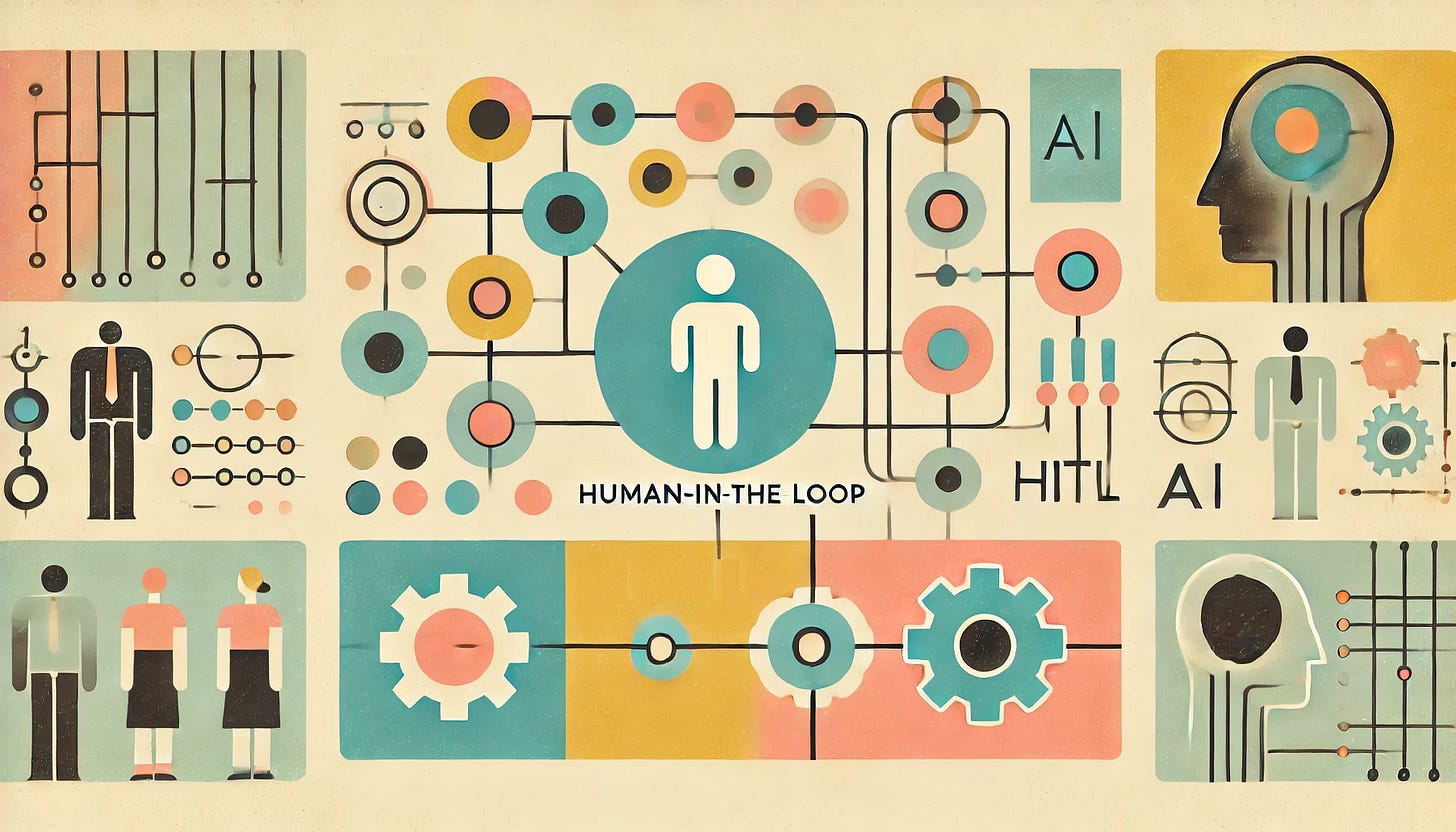

Cutting Through the AI BS: "Human-in-the-Loop"—AI Has a Backseat Driver

Human-in-the-Loop (HITL) isn't some flashy, new-age concept; it's old-school insurance against AI screwing things up. Case in point: A while back, I thought I'd be cute and text my girlfriend Angela a "Hey cutie, what's going on?" message. But predictive text had other ideas: it swapped in another contact's name, Angelica, without me noticing. Let's just say Angelica didn't respond, and I had to eat some serious crow when I figured out what happened. Sure, AI can make suggestions, but only a human can make sure they're right and, sometimes, prevent a complete disaster.

Back in the '50s and '60s, when military tech was booming, HITL kept missile guidance and radar systems from going rogue by making sure a human had the final say. By the '70s, industrial robots had taken over factory floors—machines could crank out parts, but humans were still there to slam the brakes if something went sideways. By the '80s and '90s, AI started creeping into healthcare and finance, offering "recommendations" while people made the real calls.

Today, HITL is still the safety net that keeps AI from going off the rails when it matters. Take healthcare: Diagnostic AI can flag possible tumors, but it's the doctor who decides if they're real or just noise. In hiring, AI can help screen résumés, but human recruiters are essential to determine which candidates are the best fit and make the final call. And self-driving cars? They're great on highways, but throw in a detour or bad weather, and you still want a human at the wheel. HITL keeps AI on a leash, making sure the final calls—especially the life-or-death ones—stay in human hands, where accountability matters.

Looking ahead, HITL isn't going anywhere; it's just evolving. Otter.ai and AI fraud detection tools are great examples: They handle the heavy lifting but still rely on us to make the important calls. As AI gets smarter, humans might step in less, leaving routine decisions to the machines and jumping in only for the complex stuff. But don't expect AI to run free anytime soon; ethical and regulatory guardrails remain strict, especially where empathy, judgment, and accountability matter. HITL bridges the gap between autonomous tech and the human need for responsibility, nuance, and common sense.

So let's keep it real. AI may seem like it's everywhere, but it's far from taking over. What we're dealing with is a useful set of tools—whether it's flagging a sketchy charge or helping you prepare for a meeting. AI isn't the all-powerful force depicted in sci-fi movies; it's a productive partner when we keep it in check and make sure it remains a helper, not a replacement.

What about you—have you had experiences with AI tools that helped make your life easier? Or maybe you’ve run into tech that created more problems than it solved? Drop a comment and share your story—what’s been helpful or maybe just plain frustrating in your AI adventures.

And as AI continues to shape more of our world, we’re left with big questions: Who’s accountable when it fails? How do we keep it fair and unbiased? And where do we draw the line on privacy? These aren’t hypothetical issues—they’re real challenges we’ll tackle in the coming weeks with a deep dive into the essentials of AI ethics. Join the conversation… and stay tuned!

Found what you've read useful? Share this newsletter so more people can drive their own AI train—rather than be run over by it.

Mark Roy Long is Senior Technical Communications Manager at Wolfram, an AI innovation leader. His goal? To make AI simple, useful, and accessible.